Disentangled Representations for Short-Term and Long-Term Person Re-identification

**NeurIPS 2019 / TPAMI accepted**

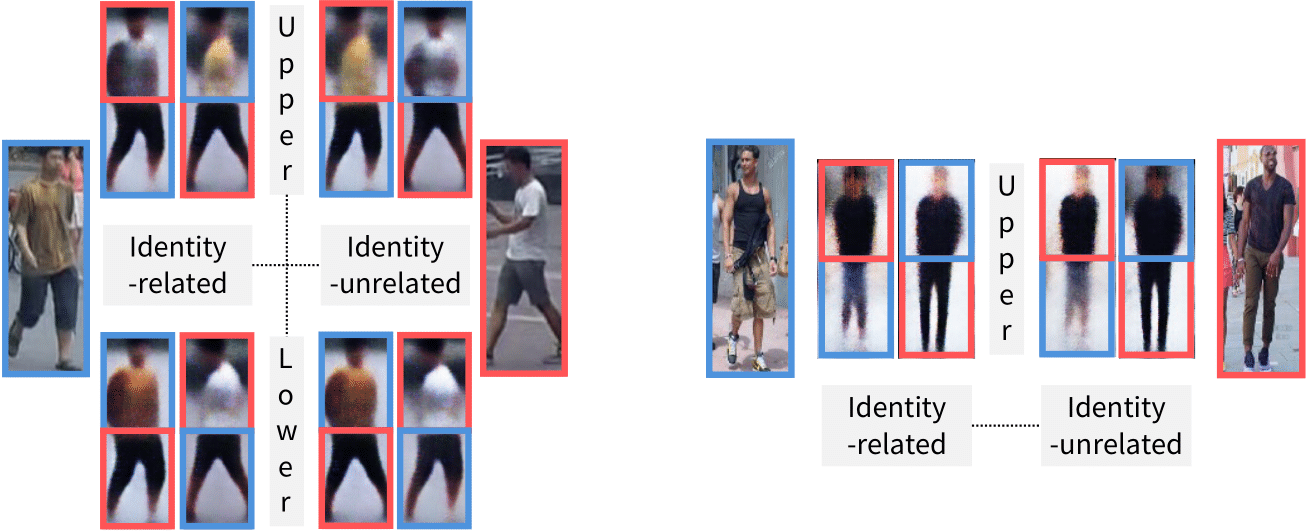

Fig. Visual comparison of identity-related and -unrelated features. We generate new person images by interpolating either identity-related features (top) or identity-unrelated features (bottom) between two images, while keeping the other features fixed. Identity-related features encode, e.g., clothing and color, whereas identity-unrelated ones capture, e.g., human pose and background clutter. Note that we disentangle these features using identification labels only.
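The interpolation in the figure can be sketched at the feature level. This is a minimal sketch, assuming each image is encoded into a pair of vectors (identity-related, identity-unrelated) and that the fixed features are taken from the first image; the encoder and the generator that would decode each pair into an image are not shown, and the names `lerp` and `interpolate_codes` are hypothetical.

```python
from typing import List, Sequence, Tuple

Vector = List[float]
Code = Tuple[Vector, Vector]  # (identity-related, identity-unrelated)


def lerp(a: Sequence[float], b: Sequence[float], alpha: float) -> Vector:
    """Element-wise linear interpolation (1 - alpha) * a + alpha * b."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    return [(1.0 - alpha) * x + alpha * y for x, y in zip(a, b)]


def interpolate_codes(src: Code, dst: Code, steps: int, vary: str = "related") -> List[Code]:
    """Return `steps` code pairs moving from src to dst along one factor.

    vary="related":   interpolate identity-related codes, keep src's unrelated code.
    vary="unrelated": interpolate identity-unrelated codes, keep src's related code.
    (Fixing the first image's features is an assumption for illustration.)
    """
    if steps < 2:
        raise ValueError("steps must be at least 2")
    (rel_a, unrel_a), (rel_b, unrel_b) = src, dst
    codes: List[Code] = []
    for i in range(steps):
        alpha = i / (steps - 1)
        if vary == "related":
            codes.append((lerp(rel_a, rel_b, alpha), list(unrel_a)))
        elif vary == "unrelated":
            codes.append((list(rel_a), lerp(unrel_a, unrel_b, alpha)))
        else:
            raise ValueError("vary must be 'related' or 'unrelated'")
    return codes
```

Each returned pair would then be passed to the (not shown) generator to render one image in the row.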

Authors

C. Eom, W. Lee, G. Lee, and B. Ham

Abstract

We address the problem of person re-identification (reID), that is, retrieving person images from a large dataset, given a query image of the person of interest. A key challenge is to learn person representations robust to intra-class variations, as different persons can share the same attributes, and the same person's appearance can change, e.g., with viewpoint. Recent reID methods focus on learning person features discriminative only for a particular factor of variation (e.g., human pose), which in turn requires corresponding supervisory signals (e.g., pose annotations). To tackle this problem, we propose to factorize person images into identity-related and -unrelated features. Identity-related features contain information useful for specifying a particular person (e.g., clothing), while identity-unrelated ones hold other factors (e.g., human pose). To this end, we propose a new generative adversarial network, dubbed identity shuffle GAN (IS-GAN). It disentangles identity-related and -unrelated features from person images through an identity-shuffling technique that exploits identification labels alone, without any auxiliary supervisory signals. We restrict the distribution of identity-unrelated features or encourage the identity-related and -unrelated features to be uncorrelated, facilitating the disentanglement process. Experimental results validate the effectiveness of IS-GAN, showing state-of-the-art performance on standard reID benchmarks, including Market-1501, CUHK03, and DukeMTMC-reID. We further demonstrate the advantages of disentangling person representations on a long-term reID task, setting a new state of the art on the Celeb-reID dataset.
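The two regularizers mentioned in the abstract can be illustrated with simple stand-ins. The sketch below assumes a VAE-style encoder that outputs a mean and log-variance for the identity-unrelated code and penalizes its KL divergence from a standard normal; for decorrelation it uses the mean squared Pearson correlation between identity-related and identity-unrelated dimensions over a batch. Both are illustrative choices, not necessarily the exact losses used in IS-GAN, and all function names are hypothetical.

```python
import math
from typing import List, Sequence


def kl_to_standard_normal(mu: Sequence[float], logvar: Sequence[float]) -> float:
    """KL( N(mu, diag(exp(logvar))) || N(0, I) ) for one identity-unrelated code."""
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0 for m, lv in zip(mu, logvar))


def _pearson(xs: Sequence[float], ys: Sequence[float], eps: float = 1e-8) -> float:
    """Pearson correlation of two equally long sequences (eps avoids division by zero)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy + eps)


def decorrelation_penalty(related: List[List[float]], unrelated: List[List[float]]) -> float:
    """Mean squared correlation over all (related dim, unrelated dim) pairs.

    Rows are samples in a batch, columns are feature dimensions (assumed layout).
    """
    if len(related) != len(unrelated) or len(related) < 2:
        raise ValueError("need the same batch size (>= 2) for both feature sets")
    rel_cols = list(zip(*related))
    unrel_cols = list(zip(*unrelated))
    total = sum(_pearson(r, u) ** 2 for r in rel_cols for u in unrel_cols)
    return total / (len(rel_cols) * len(unrel_cols))
```

A KL term pulls the identity-unrelated codes toward a fixed prior, while the correlation term pushes the two feature groups to carry non-overlapping information; either can be added to the training loss with its own weight.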

Framework

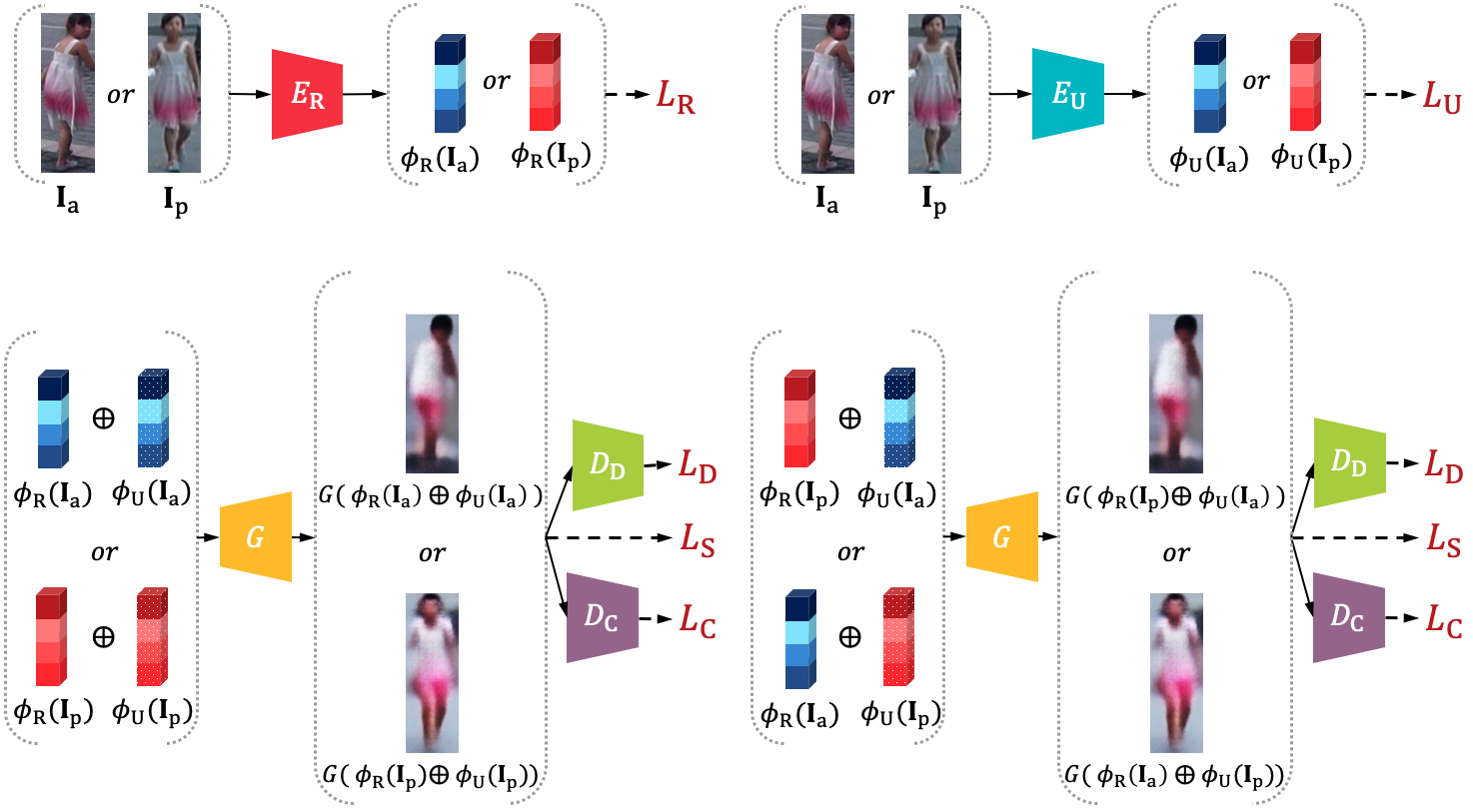

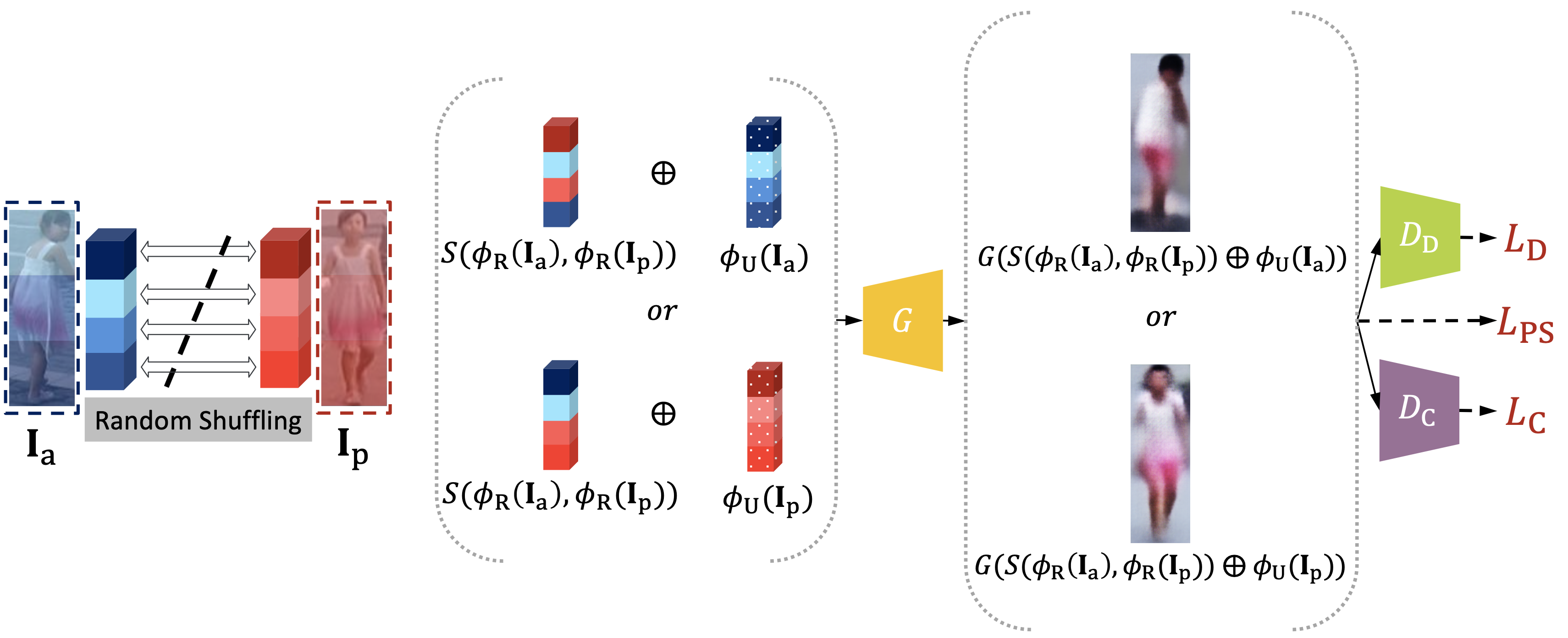

We disentangle identity-related and -unrelated features from person images. To this end, our model learns to reconstruct the input images while preserving their identities, using both the disentangled features and identity-shuffled ones.
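One way to read the identity-shuffling step is as a rule for which codes are combined and which image the generator should reproduce. The sketch below assumes that images sharing an identity label have interchangeable identity-related codes, so swapping them should still reconstruct the image that supplies the identity-unrelated code. It only builds index triples; the encoder, generator, and losses are omitted, and `identity_shuffle_pairs` is a hypothetical helper.

```python
from itertools import permutations
from typing import List, Sequence, Tuple


def identity_shuffle_pairs(labels: Sequence[int]) -> List[Tuple[int, int, int]]:
    """Build (related_src, unrelated_src, target) index triples for a batch.

    Every image is reconstructed from its own codes: (i, i, i).
    For every ordered pair of different images i, j with the same identity label,
    image j's identity-related code is combined with image i's identity-unrelated
    code, and the generator should still reproduce image i: (j, i, i).
    """
    triples = [(i, i, i) for i in range(len(labels))]
    for i, j in permutations(range(len(labels)), 2):
        if labels[i] == labels[j]:
            triples.append((j, i, i))
    return triples
```

For a batch with labels `[7, 7, 3]`, the two images of identity 7 exchange their identity-related codes, while the single image of identity 3 is only reconstructed from its own codes.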

Results

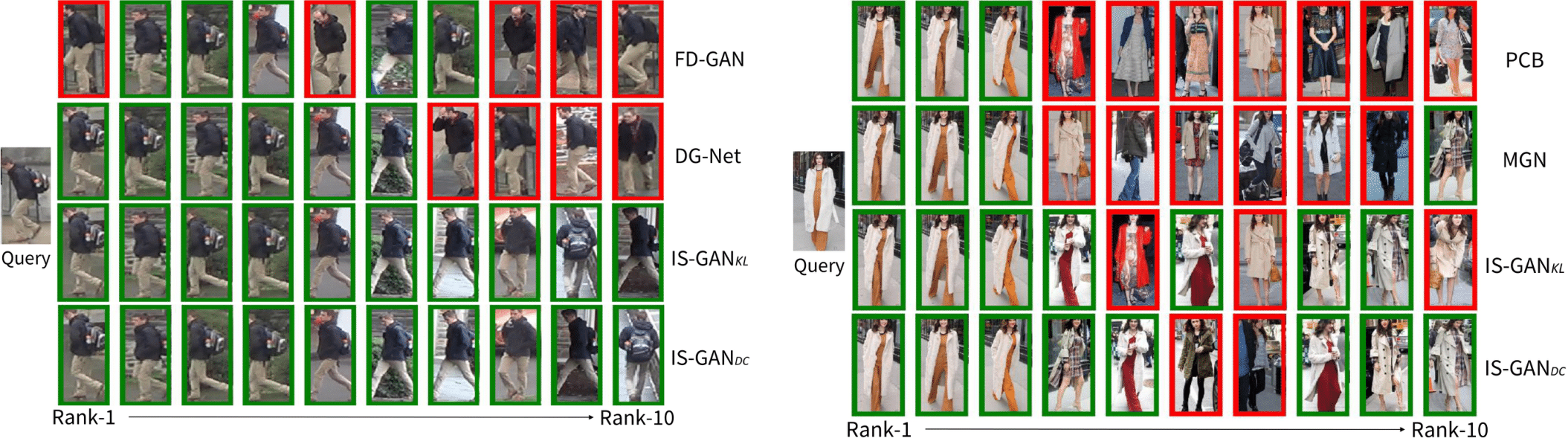

Visual comparison of retrieval results on (left) short-term and (right) long-term reID. We compute the Euclidean distances between the identity-related features of query and gallery images, and visualize the top-10 results in ascending order of distance. Results in green boxes share the query's identity, while those in red boxes do not.
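The retrieval step described above amounts to a nearest-neighbor ranking. This sketch assumes identity-related features are plain float vectors and ignores benchmark-specific evaluation details (e.g., filtering gallery images taken by the same camera); `rank_gallery` and `top_k_matches` are hypothetical names.

```python
import math
from typing import List, Sequence


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def rank_gallery(query: Sequence[float], gallery: List[Sequence[float]], k: int = 10) -> List[int]:
    """Indices of the k gallery features closest to the query, nearest first."""
    dists = [euclidean(query, g) for g in gallery]
    return sorted(range(len(gallery)), key=lambda i: dists[i])[:k]


def top_k_matches(ranked: List[int], gallery_ids: Sequence[int], query_id: int) -> List[bool]:
    """True marks a correct match (green box), False a wrong one (red box)."""
    return [gallery_ids[i] == query_id for i in ranked]
```

For example, with query `[0.0, 0.0]` and gallery `[[3.0, 4.0], [1.0, 0.0], [0.0, 2.0]]`, the distances are 5, 1, and 2, so the ranking is `[1, 2, 0]`.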

Examples of images generated with a part-level identity-shuffling technique on (left) short-term and (right) long-term reID tasks.
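Part-level identity shuffling can be sketched as mixing body-part codes between two images of the same person. This assumes the identity-related feature is split into a fixed number of part-level vectors (e.g., horizontal stripes) and that each part is swapped independently; the number of parts and the swapping schedule used in IS-GAN are not specified here, and `part_shuffle` is a hypothetical helper.

```python
import random
from typing import List, Optional, Sequence

Part = List[float]


def part_shuffle(
    parts_a: Sequence[Part],
    parts_b: Sequence[Part],
    mask: Optional[Sequence[bool]] = None,
    rng: Optional[random.Random] = None,
) -> List[Part]:
    """Combine part-level identity-related codes of two same-identity images.

    Part p is copied from image b where mask[p] is True, otherwise from image a.
    Without an explicit mask, each part is taken from b with probability 0.5.
    """
    if len(parts_a) != len(parts_b):
        raise ValueError("both images must have the same number of parts")
    if mask is None:
        rng = rng or random.Random()
        mask = [rng.random() < 0.5 for _ in parts_a]
    if len(mask) != len(parts_a):
        raise ValueError("mask length must match the number of parts")
    return [list(b) if m else list(a) for a, b, m in zip(parts_a, parts_b, mask)]
```

Passing an explicit `mask` makes the mix reproducible, which is convenient when rendering figures such as the one above.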

Citation

[Paper] [Code]

@inproceedings{eom2019learning,
  author = "C. Eom and B. Ham",
  title = "Learning Disentangled Representation for Robust Person Re-identification",
  booktitle = "Advances in Neural Information Processing Systems",
  year = "2019",
}

[Code will be updated soon]

@article{eom2021disentangled,
  author = "C. Eom and W. Lee and G. Lee and B. Ham",
  title = "Disentangled Representations for Short-Term and Long-Term Person Re-identification",
  journal = "IEEE Transactions on Pattern Analysis and Machine Intelligence",
}

Acknowledgements

This research was supported by the R&D program for Advanced Integrated-intelligence for Identification (AIID) through the National Research Foundation of Korea (NRF), funded by the Ministry of Science and ICT (NRF-2018M3E3A1057289), and by the Yonsei University Research Fund of 2021 (2021-22-0001).